"The temporal and semantic structure of dynamic conversational facial expressions"

(DFG: 2012-2015)

Humans can and do actively use their faces to convey a wide range of information on a daily basis. Much of the previous research into facial expressions, however, has focused on a very small subset of expressions, namely a few of the so-called “universal” expressions. Little to nothing is known about how the vast majority of facial expressions are produced or perceived. Moreover, nearly all research on facial expressions has used static stimuli, despite the fact that in real life our faces are rarely static. Recent research has shown that dynamic facial expressions are recognized more readily and easily than static expressions, and that they carry some form of information that is not available at any given instant.

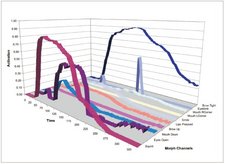

The overarching aim of this project is to provide a precise characterization of the structure of dynamic facial expressions. To accomplish this, three parallel lines of research will be conducted, ranging from a characterization of low-level motion information to an elucidation of the higher-level semantic structure underlying the facial expression space.